- Underwater docking

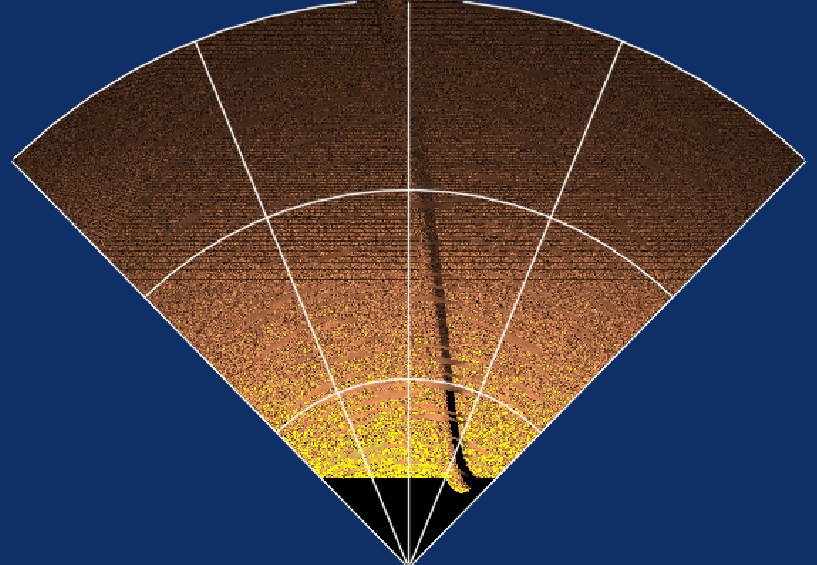

- Classification of sonar data with deep learning

- Vehicle system performance optimisation

- Hydrobatics simulator

- Autonomous underwater perception

- Underwater navigation

- Robust, flexible and transparent mission planning and execution

- Multi-agent mission planning and execution

- Air-independent energy storage

- Autonomous situation awareness and world modeling

- Underwater communication

- Networking

- Demonstrator program

Description

The aim of this project is to develop perception algorithms for autonomous underwater applications, for example machine learning and 3D modeling algorithms applied to data sensed by cameras, sonar and chemical sensors. These algorithms will provide a higher-level representation of the environment that is suitable for autonomous decision making.

We are developing algorithms for automatic classification and modeling of signals from a sensor suite. Integration with positioning information from SLAM and other sensors is integral to the high-level representations. The resulting modules will be integrated and demonstrated on the Maritime Underwater Robots, producing both classifications and 3D models in real time.

For example, our work has shown that sidescan sonar can be used to iteratively refine both the AUV pose and the bathymetric map of the seafloor, using methods such as neural rendering of the sonar signal strength.
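As a rough illustration of the idea of refining a bathymetric map from sonar signal strength, the sketch below fits a 1D seafloor height profile so that rendered intensities match observed ones. It is a toy under stated assumptions, not the project's neural rendering method: it assumes a simple Lambertian intensity model, a known sonar pose, synthetic data, and a finite-difference gradient. All function names (`render_intensity`, `refine_bathymetry`, and so on) are hypothetical.

```python
import numpy as np


def render_intensity(heights, x, sonar_alt):
    """Toy Lambertian model (an assumption): intensity = cos(incidence angle)."""
    slope = np.gradient(heights, x)
    # Unit surface normals in the vertical plane, pointing upward.
    norm_n = np.sqrt(1.0 + slope**2)
    nx, nz = -slope / norm_n, 1.0 / norm_n
    # Vectors from each seafloor point to the sonar at (0, sonar_alt).
    dx, dz = -x, sonar_alt - heights
    dist = np.sqrt(dx**2 + dz**2)
    return np.clip((nx * dx + nz * dz) / dist, 0.0, None)


def loss(heights, x, sonar_alt, observed):
    """Mean squared error between rendered and observed intensities."""
    rendered = render_intensity(heights, x, sonar_alt)
    return float(np.mean((rendered - observed) ** 2))


def numerical_gradient(heights, x, sonar_alt, observed, eps=1e-6):
    """Forward-difference gradient of the loss with respect to each height."""
    base = loss(heights, x, sonar_alt, observed)
    grad = np.zeros_like(heights)
    for i in range(heights.size):
        bumped = heights.copy()
        bumped[i] += eps
        grad[i] = (loss(bumped, x, sonar_alt, observed) - base) / eps
    return grad


def refine_bathymetry(initial, x, sonar_alt, observed, steps=200, step_size=5.0):
    """Gradient descent with backtracking: only loss-reducing steps are accepted."""
    heights = initial.copy()
    current = loss(heights, x, sonar_alt, observed)
    for _ in range(steps):
        grad = numerical_gradient(heights, x, sonar_alt, observed)
        step = step_size
        for _ in range(30):
            candidate = heights - step * grad
            candidate_loss = loss(candidate, x, sonar_alt, observed)
            if candidate_loss < current:
                heights, current = candidate, candidate_loss
                break
            step *= 0.5
        else:
            break  # no improving step found; stop early
    return heights, current


# Synthetic example: a small Gaussian bump observed from 10 m altitude.
x = np.linspace(5.0, 35.0, 31)
true_heights = 0.3 * np.exp(-0.5 * ((x - 20.0) / 4.0) ** 2)
observed = render_intensity(true_heights, x, 10.0)

initial = np.zeros_like(x)  # start from a flat seafloor
initial_loss = loss(initial, x, 10.0, observed)
refined, final_loss = refine_bathymetry(initial, x, 10.0, observed)
print(f"intensity loss: {initial_loss:.3e} -> {final_loss:.3e}")
```

Matching intensities alone does not guarantee that the true heights are recovered, since different profiles can produce similar intensities. In this sketch the sonar pose is held fixed; treating it as an additional unknown in the same optimisation would be one way to mirror the joint pose and map refinement described above.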

People

John Folkesson, Associate Professor, Robotics, Perception and Learning – Project leader

Li Ling – PhD student

Jun Zhang – Post-Doc